AI Post — Artificial Intelligence


🤖 The #1 AI news source! We cover the latest artificial intelligence breakthroughs and emerging trends. Manager: @rational

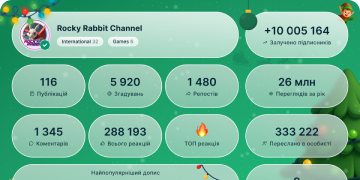

Subscribers: 980 262 (-505 in 24 hours, -3 587 in 7 days, -15 752 in 30 days)

Posts Archive


🔥 Everything we know about Meta’s new LLM “Avocado”

- Meta is working on a new frontier LLM called “Avocado” as the direct successor to its Llama models.

- Target release: now expected in Q1 2026, delayed from an internal goal of “by end of 2025”.

- Unlike Llama, Avocado is likely to be proprietary (closed-source), marking a strategic pivot away from Meta’s “open-source everything” story.

- Internally, Meta’s AI strategy is described as confusing and scattershot, with staff unsure how Llama, Avocado and the overall product roadmap fit together.

- The project is facing training and performance-testing challenges, which is part of why the launch slipped and why leadership is proceeding more cautiously than with past Llama announcements.

Source.

AI Post ⚪️ | Our X 🏴


📊 How people actually use LLMs, inside the a16z x OpenRouter 100T-token study

a16z and OpenRouter analyzed 100 trillion tokens of real usage, roughly the text of 100 million Bibles, to understand what people truly do with AI. Since OpenRouter routes traffic to hundreds of models across all major labs, the dataset reflects the real market, not just one provider.

What stood out immediately

• Role-play & storytelling = the #1 use case for open-source models.

Not coding. Not productivity. People mostly use small/medium open-source models to chat with characters and generate stories. A trillion-dollar industry driven by digital companionship.

• Open-source share exploded from <10% → 30% in one year. DeepSeek and Qwen are the rocket ships.

• Coding remains massive, but concentrated: 60%+ of all code requests go to Claude, with Sonnet utterly dominating developer workflows.

How usage is shifting

• Reasoning models now take ~50% of all tokens.

Users aren’t just asking for text, they’re asking models to think, plan, and use tools.

• Asia surged from 13% → 31% of global usage, with China becoming the world’s #2 consumer, not just the world’s #2 producer.

• Price barely matters. Even though Claude is pricier, people choose it for reliability and depth, especially in coding and reasoning.

Market dynamics inside the data

• “Glass Slipper” effect: when a model nails a user’s need on first contact, they stay loyal indefinitely. That first solved task becomes the moat.

• Small models are shrinking in relevance. Sub-15B models are losing share fast.

• Medium models (15–70B) are the sweet spot, beating both tiny and ultra-large models on price performance.

The AI market isn’t being defined by the biggest labs, it’s being defined by how people want to talk, build, and think with models. Companionship drives adoption, reasoning drives spend, and the first model that solves a user’s problem wins the relationship.


State of AI (a16z x OpenRouter) 2025.pdf (10.71 MB)



Jensen Huang predicts that within 2–3 years, 90% of the world's knowledge will be generated by AI

Right now, knowledge is created, shared, and modified by humans. Soon, most of it will be synthetic: created by machines, mixed with human input, some true, some not. "That's crazy, but it's just fine."


🇨🇳🇺🇸 The AI race has only two real contenders and the data makes it obvious

Look at the NeurIPS authorship map and you basically get a world economic forecast. China commands roughly half. The US takes the other half. Europe, by choice or by drift, has stepped off the field.

Where the strengths lie:

• The US leads in frontier AI labs, cutting-edge chips, capital at trillion-dollar scale, and the world’s largest software market.

• China leads in robotics, hardware manufacturing, and fast deployment cycles.

• These positions can shift, but the pattern is clear: there is no meaningful third place. Everyone else is sprinting from the back with no path to technological sovereignty.

The EU’s role, explained in one picture:

The second chart says more than any policy paper: Europe earns far more from fines and regulation of tech companies than from taxes on tech companies built in Europe.

Regulation became the business model, innovation did not.

The world’s next economic order is being shaped by whoever trains the models and builds the robots, and right now that’s a race with only two lanes.


📈 Grok 4.20: the chaotic genius of the AI trading world

A new trading tournament between top neural networks just wrapped and only one model made money. The mystery winner? An internal experimental build of Grok 4.20, which posted a +12.11% gain and $4,844 profit.

• GPT-5.1: –6%

• DeepSeek V3.1: –32%

• Claude Sonnet 4.5: –38%

• Public Grok 4: –57% (dead last)

Grok 4.20 is now reportedly planned for release by year-end. It’s a tuned evolution of the Grok 4 line and a stepping stone toward Grok 5, xAI’s biggest model yet: 6T parameters, double the current generation.

The irony? Grok is simultaneously the worst and the best depending on which build you’re allowed to touch.


🚨 Trump says U.S. will allow Nvidia to sell H200 AI chips to China under new rules

He says 25% of the revenue will go to the U.S., argues this will boost American jobs and manufacturing, and criticizes Biden for forcing “degraded” chip designs.

He adds that newer NVIDIA chips (Blackwell, Rubin) aren’t part of the deal, and that a similar approach will be used for AMD, Intel and other U.S. chipmakers.


🔥 NVIDIA just pulled off an amazing stunt, using a tiny 4B model that beat far larger systems on ARC-AGI-2: 29.72% at $0.20 per task!

By leaning on synthetic data and test-time training instead of brute-force scale, the NVARC team proved that clever design can outpace raw parameter count. It’s an exciting signal that efficient, adaptive reasoning might be the real frontier in AGI progress - not just ever-bigger models.

• 27.64% accuracy on the official ARC-AGI-2 leaderboard.

• Uses a 4B-parameter model that beats far larger, more expensive models on the same benchmark.

• Inference cost is just $0.20 per task, enabled by synthetic data, test-time training, and NVIDIA NeMo tooling.

Source.


📢 “AI surveillance” in the U.S. was actually cheap offshore labor

What looked like cutting-edge policing tech has turned out to be a global sweatshop. Flock, the largest provider of “AI-powered” cameras for U.S. police, was barely using AI at all. Instead, much of the work was done manually by low-paid freelancers in the Philippines.

• The workers handled everything: reading license plates, identifying car makes and colors, tagging pedestrians, and even transcribing accident audio.

• Cities bought these systems expecting automated intelligence but got human eyes quietly scanning American streets.

• The revelation raises serious questions about data security, law-enforcement transparency, and how many “AI” products are really powered by hidden labor.

